Most advice fails for a simple reason: it is built on only one source of knowledge.

Some rely on personal experience and anecdotes.

Others rely on studies and citations.

Others rely on clever theorizing.

Each of these, on its own, produces distorted conclusions—and bad advice.

At The Power Moves, we use a different standard.

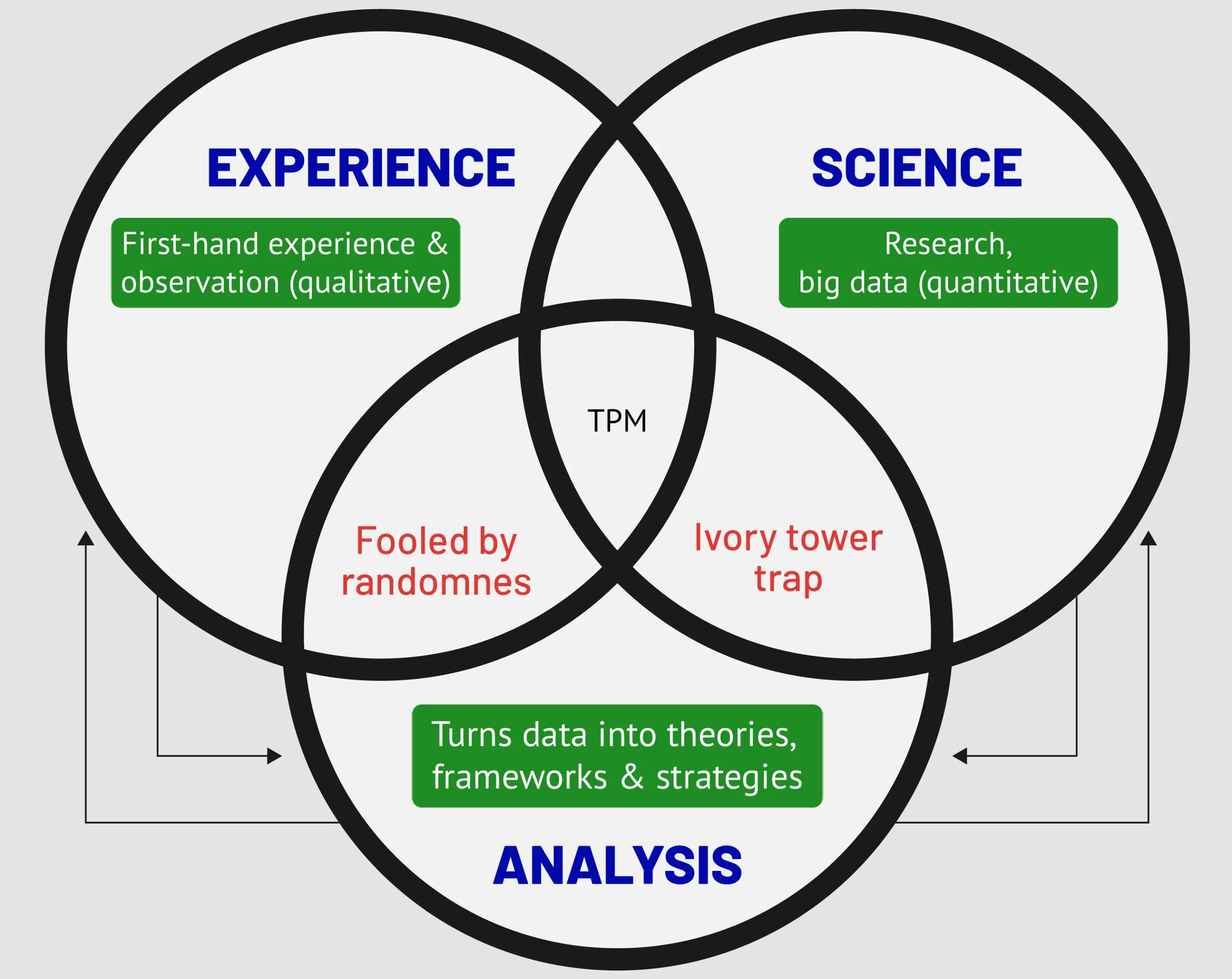

Every framework we teach is built on three pillars: first-hand experience, scientific evidence, and rigorous critical analysis.

This article explains that method in full—how the three pillars work, where each one fails on its own, and why only their combination produces reliable theories, repeatable strategies, and real-world results.

TPM 3-Pillars of Knowledge™

A practical framework TPM uses to build reliable, repeatable strategies: it requires (1) first-hand experience; (2) careful use of scientific evidence and data; and (3) rigorous critical analysis that falsifies, synthesizes, and turns findings into usable systems. Applied together, the three pillars produce theories and tactics that work across contexts.

Overview

- Experience

- First-hand experience

- Observation from others

- Science

- Data: the quantitative results of research

- Scientific method

- Critical analysis

- Logic: ensures reasoning is valid

- Critical thinking: meta-level analysis (analysis of analysis), ensuring the analysis remains logical and grounded in data

- Pattern recognition: Connects the dots, building ‘bridges of meaning’ among them. The more you connect, the better the theories

- Systemic thinking: the ability to think and observe at a higher level of abstraction (Gallon, 2009)

The final products are:

- Theories, Mental Models, & Systems that explain reality, and allow for predictions.

- General principles

- Strategies & techniques to achieve outcomes

- Real-world outcomes from applying principles and strategies

This is the process we also use for Power University.

1. Experience

Experience includes everything that one sees, does, or experiences oneself.

There are two levels of experience:

1.2. First-Hand Experience

Not all personal experiences are created equal.

For example, 30 years of “experience” in workplace politics spent in the same job, without improvement or upward movement, is not the best type of experience to learn how to progress at work.

In general, strong experience is:

- Successful experience: for example, in social skills, high status attainment

- Repeated success: for example, repeated across different social groups

- Repeated successes in different contexts, with different personalities and cultures

1.3. Observation

Observation of others allows us to increase data points.

The good social researcher observes others and seeks possible causal links between others’ behaviors, mindsets, and results.

Observation Added Value

Observation complements first-hand experience by letting us learn from:

- Different people in ways that we could not simply test by changing our behavior

- Outliers

- Deeper scrutiny from public courts or investigations (information people wouldn’t otherwise share)

- Negative examples that we may not want to repeat ourselves

How to Expand Experience

Some tips to learn from personal experience:

- Try different things: different activities, in different contexts, with different personalities

- Reflect on outcomes, and on the link between action and results

- Ask yourself “what if” and “what could I have done…”

- Broaden experience with feedback: it can point to blind spots

- 🙋🏼♂️ Lucio’s note: I ask my dates deep, probing questions, and it has provided invaluable insights into dating dynamics

Advantages of Experience

Experience is not necessarily the first pillar of every discipline, but it is the main pillar for TPM-related disciplines.

Among the advantages of experience:

Reliable if based on repeated success

Repeated results can provide the best data and insights to tease out the best strategies for achieving outcomes.

However, there are important caveats:

- Generalizability: results might not necessarily be generalizable to different personalities and contexts

- Good analysis is needed, especially to generalize results to different environments and different individuals.

Remember that successful practitioners with poor analytical skills rarely make for great teachers.

High-quality data for pattern recognition

The deeper and broader the experience, the more patterns we can recognize.

Highest-quality data points for social intelligence

While people differ in social and power intelligence at birth, experience is necessary for everyone to improve it.

Limitations of Experience

For all its importance, there are also important limitations to personal experience, including:

Some results cannot be reproduced many times over

While experiences like conversations and cold approaches can be repeated many times over, some others cannot.

In some endeavors, individual success is inversely proportional to quantity or breadth of experience.

For example, peak relationship success is to have one single relationship for life, and peak career success is to become CEO of the first company one has joined. Both limit the breadth of experience.

This can be problematic for deriving generalizable principles.

Success may be due to randomness and chance

For example, if a guy teaches entrepreneurship after he’s had one successful business, it may be a case of ‘right time, right product, and luck’.

Critical thinking may help differentiate luck from effective mindsets and strategies.

Personal experience needs critical thinking for higher-level mastery

Personal experience without good analysis is limited by one’s own experience.

If one is unable to tease out general principles, they will fail as soon as their circumstances change.

Deep but narrow experience leads to one-trick ponies

In “Antifragile” Nassim Taleb says that trained fighters are poor street fighters because of their over-experience in a controlled environment.

I don’t necessarily agree with the example, but he raises a valid point. There is an inverse relation between contextual uniqueness and transferability of results.

Personal experience is limited by individual uniqueness

In general:

The results of the specimen do not generalize to the species, and his strategies for success do not necessarily apply to any other random specimen

For example, a handsome and wealthy man teaching seduction may not be very helpful to less attractive men.

2. Science

Includes:

- Studies, experiments, and surveys

- Big data analyses

- Tracking data (analytics software)

As well as:

- Scientific method and scientifically-informed thinking

In social skills, science’s data points can confirm or disprove the patterns seen in personal experiences.

In the case of confirmation, one can rest easier about the validity and reproducibility of his experience. If scientific data instead disproves one’s own experience, then he knows he must dig deeper.

Science Added Value

Scientific data improves our understanding with:

Mitigates the risks of inductive reasoning (Fooled by Randomness)

Science adds quantity and diversity of data that allows more confident generalizations of principles.

I often see it as a red flag when people are too self-confident based on limited experience.

It’s a case of inductive reasoning: going from one case, or a small number of cases, to the general rule.
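To make the risk of generalizing from a few cases concrete, here is a minimal sketch that puts an approximate 95% confidence interval around an observed success rate. It uses the Wilson score interval, a standard statistical tool that is our choice for illustration and not something the TPM method prescribes. The function name and the example numbers are hypothetical.

```python
import math

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a success rate (z=1.96)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    )
    return center - half_width, center + half_width

# Hypothetical numbers: the same 80% observed success rate,
# once from 5 attempts and once from 500 attempts.
small_sample = wilson_interval(4, 5)
large_sample = wilson_interval(400, 500)
print(small_sample)  # wide interval: roughly 38% to 96%
print(large_sample)  # narrow interval: roughly 76% to 83%
```

With 5 attempts, an 80% success rate is compatible with a true rate anywhere from mediocre to excellent. With 500 attempts, the same 80% is a much safer basis for a general rule.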

High quantity & quality of data

Data and surveys can provide quantity and diversity of data points that experience alone may struggle to match.

For example, it would be hard to estimate, say, the average number of sexual partners in a specific country just by asking around.

And yet, that’s very important information to assess the sexual dynamics of a given sexual marketplace (on average: high partner count means easier access to casual sex, higher incidence of cheating, and fewer lifelong marriages).

Scientific data is also more structured. Personal experience, by contrast, is most often unrecorded, which introduces its own biases (e.g., the recency effect and the salience effect). Structured data also allows for more granular analyses (e.g., subgroups of religious vs. non-religious women, and cohorts to track changes over time).

Experiments can set up contexts one can hardly replicate in real life

This goes both ways: some real-life situations are difficult to replicate in the lab. But some lab experiments can be challenging to experience in real life.

Take for example the Milgram experiment on obedience: very few could experience a similar scenario in real life.

Science can isolate variables

Studies can help isolate single variables, re-run the experiment with different manipulations, and provide a more accurate measurement.

For example, measuring the effect of “priming” on persuasion and behavioral change is nearly impossible in real life.

But a series of smart experiments can find out whether, and how well, priming actually works.

Limitations of Science

Recent populist movements aside, our world reveres science.

That’s a great thing, and well deserved. Science is a true engine of progress.

However, “revering” anything is actually unscientific.

Proper science includes knowledge and awareness of the limits of science and, even more, of the limits of a specific paper, experiment, or survey.

This is all the truer in the social sciences, where replicating and measuring dynamics of influence, power dynamics, or social strategies is particularly challenging.

Some of the limitations of science include:

Research-specific limitations

Poorly-designed or low-powered studies can sometimes be actively misleading.

The examples are too many to count, from priming to power poses, and they prompted a period of soul-searching in psychology (and some skepticism that at times was an overreaction).

Critical thinking helps: looking at methodology, sample size, and possible confounding variables helps put individual studies in perspective.

In social sciences, some research applications to the real world can be dubious (external validity)

Some studies can be perfectly executed, and still not provide great insights about real-world dynamics.

Psychologist Martin Seligman says that external validity is one of the banes of psychology research:

This is the “white rats and college sophomore” issue: researchers can control and measure what rats and sophomores do in the laboratory, but any finding’s application to real human problems is always a strain

And he adds that “public doubts about the applicability of basic, rigorous science are often warranted, and this is because the rules of external validity are not clear”.

Critical thinking, supported by personal experience, can help separate scientific signal from scientific noise.

Many false positives & false negatives for complex dynamics

I’ll give you an example:

Betsy Prioleau in “Swoon” says that women prefer men with feminine faces.

Indeed, there are plenty of studies that suggest that women prefer more feminine faces. But there are also plenty of studies that seem to suggest that women prefer more masculine faces.

This is normal.

Investigating complex issues with high individual variance often leads to studies reaching opposite conclusions.

Meta-analyses may help, but they’re not enough.

Critical thinking must step in with hypotheses to be tested with ad-hoc studies that isolate variables (e.g., high-value women’s preferences, risky vs. safe environments, women’s age, etc.).

Experience helps.

For example, a man hypothesizes that younger women tend to prefer more feminine faces based on boyband fandom, and that may inform future research, or how to assess existing studies.

Science Power Dynamics & Incentives Don’t Always Align With Truth

Just like any other human, scientists also have personal biases, and may place personal success above ‘truth’.

Some of the issues with published research:

- The full published literature is inherently biased towards larger effect sizes because:

  - Publishers are more likely to publish largely significant or shocking results rather than mild, confirming, or “null” results

  - Researchers may prefer results that are most likely to be published, with an incentive to “massage” the data (“p-hacking”)

- Fraud, or wholly made-up data. See for example the cases of Francesca Gino and the Stanford Scandal

- Seeking personal fame: publishing too early, fudging, or over-selling smallish (and barely effective) findings. For example, Amy Cuddy/power poses, Angela Duckworth/“grit”

- Self-help distortions: although not a criticism of science, even valid constructs like vulnerability or “growth mindset” can turn ineffective when misapplied
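To illustrate the first point in the list above, here is a toy simulation of publication bias. It is a sketch under simplified, hypothetical assumptions: every study measures the same small true effect, noise is normal with known unit variance, and only “significant” positive results get published. The function name, the parameter values, and the publishing rule are all illustrative, not a model of any real journal.

```python
import random
import statistics

def simulate_published_effects(true_effect=0.2, n_per_group=20, n_studies=2000, seed=0):
    """Return (mean effect of all studies, mean effect of published studies, count published)."""
    rng = random.Random(seed)
    # Standard error of a difference between two group means (unit variance assumed)
    se = (2 / n_per_group) ** 0.5
    all_effects, published = [], []
    for _ in range(n_studies):
        treated = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        effect = statistics.fmean(treated) - statistics.fmean(control)
        all_effects.append(effect)
        # Assumed publishing rule: only significant positive results make it to print
        if effect / se > 1.96:
            published.append(effect)
    return statistics.fmean(all_effects), statistics.fmean(published), len(published)

all_mean, published_mean, n_published = simulate_published_effects()
print(f"true effect: 0.2, all studies: {all_mean:.2f}, published only: {published_mean:.2f}")
```

Averaged over all studies, the estimate lands close to the true effect. Averaged over the published ones only, it comes out several times larger, because small studies can only cross the significance threshold when they overestimate the effect.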

A good chunk of pre-replication crisis results are overstated or misleading

Incentives may have been particularly badly misaligned before the replication crisis.

And researchers had much more freedom and leeway to “torture the data” until the data looked ‘good’.

3. Critical Analysis

Critical analysis turns all the available data into theories and principles.

Good critical analysis could be boiled down to “thinking well”.

🙋♂️Lucio’s Take: The secret sauce to TPM’s uniqueness?

Lucio:

I used to think that The Power Moves’ popularity came from combining experience with science.

Today I think differently.

Although few do it well, many creators talk about personal experience and add some citations. So today I believe that the true added value of this website is in the critical analysis.

Critical analysis includes:

- Logic

- Skepticism and ‘healthy cynicism’ to consider possible personal biases

- Falsification / critical refutation, or considering why a theory may not be true <— 🙋🏼♂️ Lucio’s note: It’s something I do naturally, and that I believe is very useful, and something I wish to see more of

- Systems thinking, looking for patterns to turn data into coherent theories and principles

- Practical application from theories

Critical Analysis Added Value

Solid critical analyses allow for:

Critical Analysis Turns Data Into Predictive Theories

Predictive theories provide the theoretical infrastructure to understand the links among different phenomena.

For example, the evolutionary theory of male parental investment allows us to understand why women are more selective than men, but also why men are selective for long-term relationships, and more selective than most other males in the animal kingdom.

Critical Analysis Turns Theories Into Strategies

To achieve real-world outcomes.

Critical analysis troubleshoots inconsistencies

Inconsistencies can arise between different experiences at different times, or between experience and scientific data.

Critical analysis provides the first test of whether inconsistencies weaken, undo, or even reinforce a theory.

For example, some men’s attraction to very old women could, on paper, undermine evolutionary psychology. However, critical analysis would look at how common it is, whether these men are also attracted to younger women, and provide first testable explanations that may even strengthen the general theory and principles.

Critical analysis tells whether personal experience or science should prevail

When science and experience diverge, critical thinking can tell what to assign more weight to.

For example, imagine in a survey 95% of men say they have more power in their relationship, 63% of the women confirm, but your personal observation suggests otherwise.

In this case, an experienced observer may be right and good analysis may explain why.

Men and women both fall for social desirability bias, and men for ego self-protection as well.

And they both focus on the ‘official’ leadership role, which is often a front, instead of what most often moves the needle: who has more emotional influence over the other.

Conversely, data beats personal experience when the data is highly reliable, for example in a well-run sales page A/B test.
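As a rough illustration of what “reliable” can mean in an A/B test, the sketch below runs a two-proportion z-test on conversion counts. The test is a standard statistical technique picked here for illustration; the function name and the visitor and conversion numbers are hypothetical.

```python
import math

def ab_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test. Returns (z statistic, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data: the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical sales pages: 10% vs 16% conversion in both cases,
# first with 50 visitors per page, then with 5,000 visitors per page.
print(ab_test_p_value(5, 50, 8, 50))
print(ab_test_p_value(500, 5000, 800, 5000))
```

With 50 visitors per page, the difference could easily be noise, so an experienced marketer’s judgment may still deserve the greater weight. With 5,000 visitors per page, the same difference is very unlikely to be chance, and the data should win.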

How to Improve Critical Analysis

Keep A Constant Feedback Loop Between Experience & Theory

Incorporate the latest experience into your general theory and principles.

Matching experience reinforces the theory, while mismatching experience provides opportunity for refining the theory (and continuous mismatching experience for scrapping the theory).

Let Go of Biases

You can either learn the truth and, in turn, develop effective strategies to win, or you can hold on to your biases.

Analyze Feedback Non-defensively

You may dislike someone or someone’s feedback.

But before dismissing it, ask yourself if there may be anything true in what they said.

Strike A Balance Between Open Mind & Brains Falling Out

For example, I consider the “law of attraction”, in the sense that you can “manifest” things, to be untrue and unworthy of my investigative time.

I still keep a small window open to the possibility that it might be true, but don’t waste time investigating it or researching it.

Never Stray Too Far From The Data

Good analysis limits itself.

Good analysis must stay close to the data: the farther analysis strays from data and experience, the more speculative it becomes.

And the farther it strays from good data, the more prone it becomes to being twisted by personal biases.

Just like a smart swimmer keeps an eye on the coastline to ensure he can always swim back, good critical thinking never strays too far off experience and data.

Falsify Your Own Theories: Red Team

Referring to entrepreneurship, Jay Samit says that the best ideas are “zombie ideas”: they survived a thousand attacks and aren’t as good-looking anymore, but they showed survivability.

Theories are the same, and asking ‘why it’s not true’ is often more important than asking ‘why it’s true’.

Read & Learn From Critical Thinkers

And you’ll naturally move closer to their way of thinking.

For more, also read:

- Critical thinking analysis of news

- Critical thinking analysis of an ad

- Critical thinking against journalist’s games

Limitations of Analysis

The effectiveness of critical analysis depends wholly on the quality of that analysis.

Poor analysis may be worse than no analysis at all because it leads to wrong theories, which in turn lead to wrong predictions and strategies.

And a bad strategy is worse than a purely random strategy.

So here are some limitations to be aware of:

- Good analysis needs good data points, as per the ‘garbage in, garbage out’ principle. Great thinkers with little experience and data do not make for good theories

- Good analysis demands absence of personal biases, and although it may sound obvious, unbiased analysts are a tiny minority.

- Biased good thinkers are the worst, since they are better able to fool themselves and others

Avoid teachers with poor critical thinking

Reliable signs of poor thinking include 1) over-generalizations; 2) few nuances and exceptions; 3) generic ‘laws’ with few notes on how to calibrate them

End Product: Systems

The end goal is developing higher-level theories that explain reality.

For our practical goals, we then extend to effective strategies for replicable results across different contexts and personalities.

A good system empowers you to make predictions and strategize effectively to achieve goals.

You can:

- Recognize patterns: for example, quickly draw conclusions about someone’s character, or about the social and power dynamics of any new situation

- Handle information better and make connections and predictions: you categorize incoming information to start forming a larger picture, and can then make connections from disclosed information and infer undisclosed information

- Respond effectively to achieve goals as you can predict what certain actions will lead to

Systems Open The Doors To All Sub-Systems

In many disciplines, different sub-systems are connected.

For example, social skills’ main systems include human nature, social exchanges, and interpersonal power dynamics.

Understand those, and you understand most socialization, whether it’s in relationships, business, or friendships.

TIP: For Mentors, Pick Good Thinkers Who Understand The Dynamics:

Pick not just people who are good at doing something, but people with a deep grasp of the underlying dynamics. These people can teach you how to apply general principles to different people, in different situations.

Systems Allow For Real-World Outcomes

Many researchers stop at the theoretical level, without providing advice.

However, as a platform for male agency, we turn theories into strategies to help men achieve goals.

And the better the model, the better the strategies, including higher granularity and contextual adaptability.

Failures of The 3-Pillars Approach

The law of balance also applies to epistemology, and the three pillars need to be in balance.

Any excess in one of them without being balanced by the others leads to distortions and, ultimately, to poorer theories and strategies.

The failures are:

- All science / empirical data: lacks real-world testing and an overarching theory, and can’t offer guidance on novel situations outside of the specific test conditions it relies on

- All experience: fails to systematize and replicate for others, and struggles to self-correct and improve

- All analysis: never tests its theories, and likely leads to heavy theorizing that doesn’t produce results

Intersecting two pillars improves the outcomes, but it is still not ideal.

Let’s see some examples of unbalanced pillars:

Little Data, Much Analysis: Freud

- Personal experience: high

- Science: lowish

- Analysis: very high

- Critical thinking: low (no falsification, little scientific approach)

Freud’s experience came from his practice, and on top of clinical samples being inherently biased, the data weren’t quantified and systematized.

Freud focused more on theorizing, and didn’t seem keen on falsifying his theories.

The results are telling: despite being a genius with groundbreaking insights, large swaths of his theories are now discredited.

For example, Seligman says (Seligman, 2002):

The events of childhood are overrated (in Freudian psychoanalysis).

It turned out to be difficult to find even small effects of childhood events on adult personality, and there is no evidence at all of large—to say nothing of determining—effects.

This means that the promissory note that Freud wrote about childhood events determining the course of adult lives is worthless.

Martin Seligman

This is a good cautionary tale: if you stray too far away from empirical evidence, you’re almost bound to get out of touch with reality and build “castles in the sky”.

Self-referential theories worsen over time because they build upon themselves over increasingly poor foundations. The data points are swapped for hypotheses until most hypotheses stand on no data at all.

The odds of mistaken theories and constructs increase exponentially.

Bad data points (poor bricks) and bad data analysis (poor construction) make for bad theories (poor buildings)

Freud’s castles in the skies sounded coherent. But without grounding data, it was philosophy more than science.
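The compounding of errors in self-referential theories can be sketched with simple arithmetic. The snippet below assumes, purely for illustration, that each untested inference is right with the same probability and that the inferences are independent; real theories are messier, and the function name and numbers are hypothetical.

```python
def chance_chain_holds(p_step: float, n_steps: int) -> float:
    """Probability that every link in a chain of untested inferences is correct,
    assuming each link is independently right with probability p_step."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be between 0 and 1")
    return p_step ** n_steps

# Even with inferences that are right 90% of the time,
# the odds that the whole chain holds drop quickly.
for steps in (1, 5, 10, 20):
    print(steps, round(chance_chain_holds(0.9, steps), 2))
```

Ten stacked hypotheses that are each 90% likely to be right leave only about a 35% chance that the whole construction is sound, which is why checking each layer against data matters.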

Much Experience, Little Data & Analysis: Tucker Max

- Personal experience: very high

- Science: low

- Analysis: medium

- Critical thinking: low

Tucker Max was a ‘frat boy’ who slept around with an ‘asshole style’.

Tucker has lots of first-hand experience: after he became famous, he allegedly had more than one thousand sexual partners.

Yet, his experience is limited to:

- University

- Asshole style

- Fame-based dating

His experience is extensive in depth, but limited in breadth.

Tucker would be a poor teacher for most guys who are not interested in ‘asshole game’, who are not frat boys, and who are not famous.

Much Science & Analysis, Poor Critical Thinking: Homo Economicus

- Personal experience: low

- Science: very high

- Analysis: high

- Critical thinking: very low

Early game theorists and economists developed theories and mathematical models around an ideal of full human rationality that barely exists in real life.

Behavioral economist Richard Thaler says, on the limitations of the “homo economicus” theories:

As economists became more mathematically sophisticated, the people they described evolved as well.

(…)

Calculate the present value of social security benefits that will start 20 years from now? No problem!

Stop by the tavern on payday and spend the money intended for food? Never!

Thaler, “Misbehaving”

It shows:

- Science getting out of touch with personal experience

- Analysis building upon itself unchecked by critical thinking

Data and science had internal validity, but didn’t accurately describe reality.

Proper critical thinking should have leveraged experience and said: “wait a second, do the people I see around me every day act like perfectly rational creatures? Actually, they don’t…”

The Power Moves Approach

Three pillars, or nothing

Here at TPM we seek to only tackle topics where we have all 3 pillars in place.

Some exceptions apply whenever I feel we can add value.

In some fields, not all 3 sources are available.

For example, there is limited scientific guidance on manipulation dynamics, or best career strategies. And I exited the corporate life soon-ish (albeit with upward mobility, large breadth of experience, and deep observation).

But since most mainstream advice is full of platitudes, TPM can add value.

Finally, much of interpersonal power dynamics as a discipline is not yet codified.

So it’s part of our job to break some new ground and systematize it.